Reasoning under constrained intelligence: a simple Bayesian model with rejection

Do stupid people just ignore evidence they can't understand?

For a while now, I’ve been bothered by the apparent ability for the majority of people to have nearly everything important I say go in one ear and out the other. I have unconsciously assumed that this is merely defiance; they surely understand what I say, but don’t like it and therefore choose to ignore it, committing a humongous epistemic fault in the process.

I have assumed this because they don’t do what I would expect if they didn’t understand. My naive expectation is that they would communicate that they are struggling: “I don’t understand, can you explain this more clearly?” And if I try, and they still don’t get it, I expected that they would say something like, “I don’t think I’m going to understand this now, I might need to study it. I was never the best with statistics. So perhaps I should withhold my own judgment on the topic.”

But maybe the very act of admitting you don’t understand takes an above average amount of intelligence or character; in my expectation, I am projecting, but this is dumb when you have a very rare psychology. Dumb people who tend not to understand a lot of things may think they understand them, but because they actually don’t, their priors simply don’t change. They think they’ve genuinely found the evidence to be irrelevant, when it’s not, and the reality is that they don’t understand. This would implies that dumb people tend to ignore evidence they don’t comprehend, while explaining why they don’t admit that they don’t understand in a clear and public way.

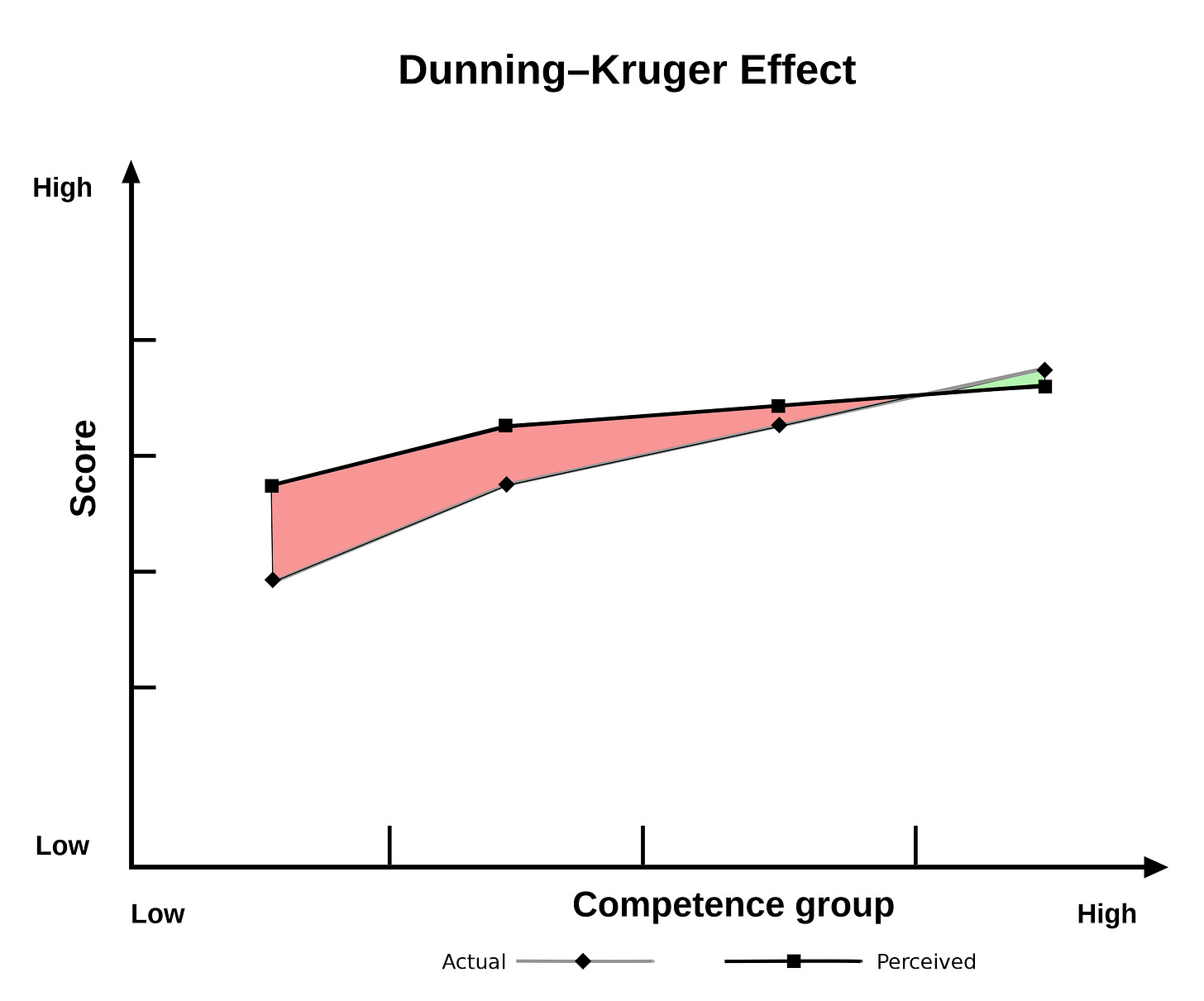

There is a well-known finding in psychology called the Dunning-Kruger effect that lines up with this model. High competence understanders have the most accurate view of their competency. Low competence understanders think they understand a lot more than they do, to the point where the perceived gap between low and high understanders appears to be about 10% of the size of the real gap. They are off by potentially a factor of 10. That is huge; that’s the difference between $30,000 and $300,000 in my bank account. They think they have $270,000 while I have $300,000 when they actually have more like $20,000.

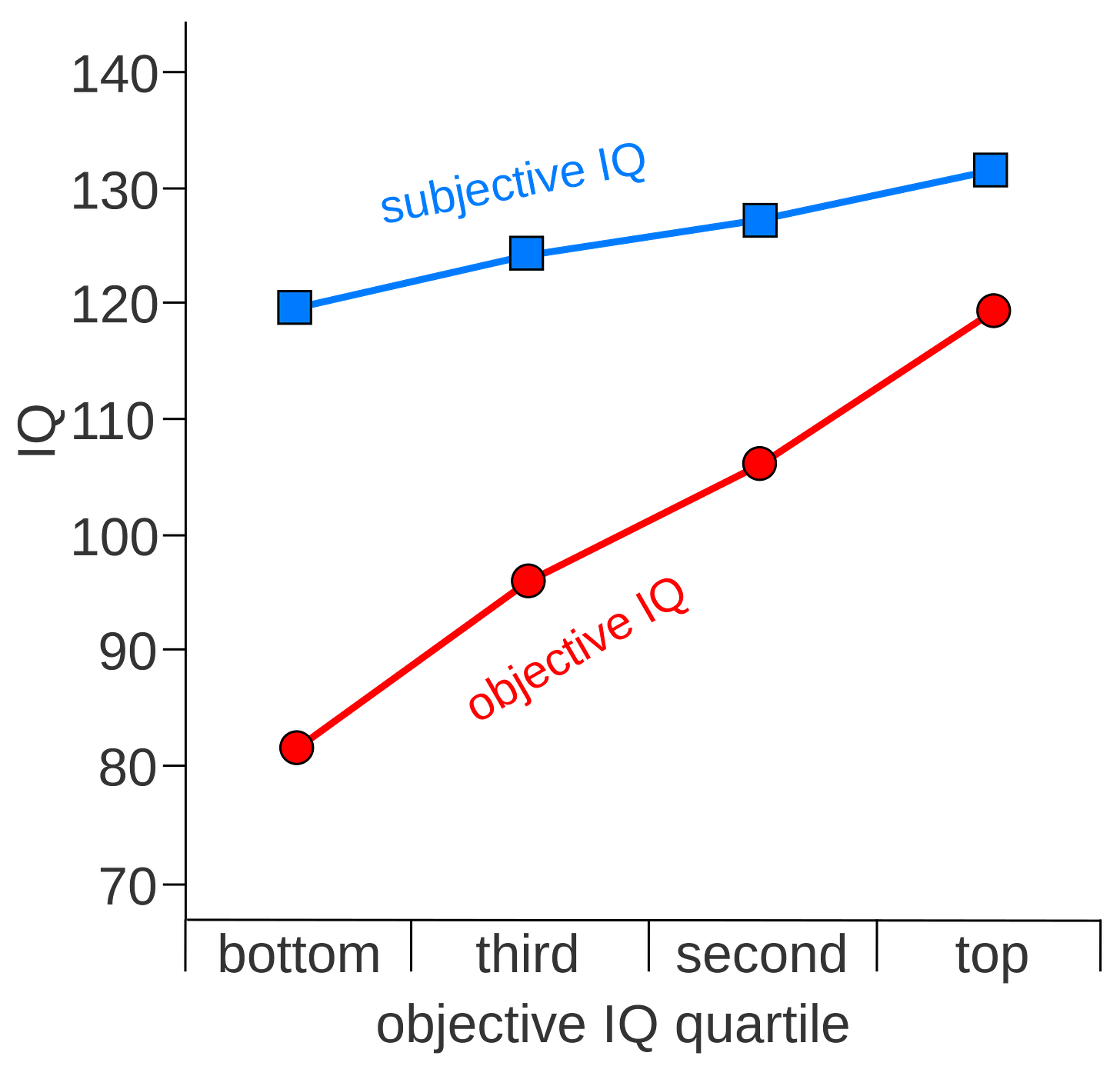

More interestingly, everyone seems to think (assuming the simulation axioms are legitimate) that they have IQs above the political agency threshold when in reality that’s about 5% of the population, at most. In other words, they think they can think for themselves, when in reality they can’t. They must have been told that they are special by the TV!

Chat about politics with GPT or someone at or under GPT’s IQ and they’ll repeat news media talking points. If you point this out, they’ll tell you with utmost conviction that they thought of these things themselves, that they have been saying the same things since the 90s (about masks and gay marriage and transexuals surely!), etc. They are 90-110 IQ in all likelihood but think they are beyond the political agency threshold of 120. They have $30,000 to your $330,000 but think they’re resting comfy with $300,000. They don’t see that you outrank them by a factor of 10, they are convinced they’re right up there with you.

And so you get no respect. When you say something, and they don’t get it, they think they do get it but don’t see the connections. And so, instead of deferring to your judgment, it goes in one ear and out the other. They have no idea how incompetent they are.

This could be, as I hypothesized above, because self-awareness is g-loaded. But it could also be due to the character-depravity of the average person. These hypotheses are not mutually exclusive of course — it’s probably both. The inflated competency assessment might be due to a combination of ignorance, ego, ego-fueled performative ignorance, and ignorance-fueled ego.

In exousiology there are three pillars of mass behavior that are developing: materialism, hedonism, and narcissism. The average person has crass tastes and wants stuff, putting little value on truth and beauty; he wants constant candy for dinner, becoming obese and engaging in high time preference behavior; and he believes that he is equal, he demands catering and will make no sacrifice for the greater good. These pillars explain why, as life gets easier and the elite don’t have to be as elite in character, things become ugly, degenerate, and egalitarian. Average people have temperaments which are ugly (materialistic), degenerate (hedonistic), and narcissistic (egalitarian).

It would be odd if the Dunning-Kruger effect didn’t relate psychologically to the mass egalitarian gaslighting over group differences in competency. We accept that it is egalitarianism when people insist that, e.g. Blacks or women have the same distributions of intelligence as white men when in the same environments; surely egalitarianist gaslighting is also a part of the picture when low competence individuals rate themselves bizarrely well.

The model

The model is like this: when someone is presented with a proposition P_n, they assimilate it into their known propositions P_1, P_2, … , P_n. They attempt to compute P(Theta | P_1, P_2, … , P_n) = P(Theta | P_1, P_2, … , P_n-1) P(Theta | P_n) using their best knowledge of P(Theta | P_n) = P(P_n | Theta) P(Theta) / P(P_n). But they can’t compute this if they can’t understand P_n —otherwise, how are they going to estimate P(P_n) or P(P_n | Theta)? They have no idea what to make of it. It doesn’t seem related. It doesn’t change their priors at all. Maybe they get a 0 value on the bottom in there because they don’t understand how P_n could be the case and they feel an error and throw it out, and hide the error because they’re a narcissist. Maybe they get a 1 value because they really don’t see the pattern, they don’t see how the evidence is related to Theta, the parameter of interest (such as the heritability of the BWIG). Either way, it goes in one ear and out the other.

You could say P_n is too high IQ for someone who doesn’t get it. Different P_i’s could be modeled to have different complexity levels, making them accessible to different kinds of people.

If the evidence is ambiguous beneath the 130 IQ level, and the Patriciate lies, then 98% of people have a bunch of ambiguous propositions plus a consensus-proposition, the proposition that all of the smart people you see on TV have reached a conclusion about the evidence you personally have uniform priors on. Obviously, the average person will tilt their priors toward this consensus.

When a 130+ IQ HBD debater on the internet busts out 12 studies all containing statistics that 75% of people can’t learn (basic AP Statistics stuff), the average person has no idea how to compute P(P_n | Theta), so they throw all of it out and say something like, “how can you know all this, how could all of the experts be wrong? You’re not an expert, you must be like me [egalitarianism], our competency doesn’t differ much. I don’t trust you.”

The only thing they can understand is that you think they’re wrong. But you seem to be in the minority of smart people, and the majority told them that this might happen with racists. So they’re true priors don’t change much. And we haven’t even addressed the capacity to lie under type II controversy conditions. When telling the truth is costly, and one has a materialistic tastes, why tell the truth? Truth isn’t worth any cost, when your tastes are crap and ugly. Average people, if they are materialistic, which seems to be the case, will therefore lie under conditions of type II controversy.

Whether or not they reject updates of priors based on the projected costs of such an update is an interesting question. On the one hand, we should expect that it would be maximally beneficial to know truth and to simply be able to lie efficiently as needed, while making good personal decisions based on the correct information. This appears to happen with stuff like white flight. The priors system and the lying system may both be unconscious, and the conscious may only access the lying system verbally in those prone to lying for material reasons. The correct priors system may be expressed in feelings of fear or other feelings when making decisions. But none of this is necessary, people could just be skilled conscious liars, if it is adaptive. Many, like Robin Hanson, seem to always rush to deny this without much evidence to the contrary, but people will tell you to your face, ironically, that they don’t value truth much, and would lie for personal gain, including to fit in and make others feel good.

Knowing truth, whether unconsciously or not, should be maximally adaptive. If there is a wall in front of you, and it becomes politically expedient to lie about there being a wall, you want to be an organism that both lies about the wall and knows there is a wall there. Otherwise you might drive into it and die. You want to drive around it, and when asked why, say because you prefer the scenic route, but have no problem with driving through the place where there is totally not a massive wall. You want to not live around Black people while stating that Black people are totally equal and you have no problem with them. You do not want to end up in a Black neighborhood at 3 AM unarmed because you think Black people are equal; that’s like driving into the wall at 60 mph. Therefore, we should not expect social cost to be considered when changing priors; there should be a parallel lying system, in an adaptive man. Although since evolution is not perfect, this does not have to be the case.

Properties of the model

Whatever the underlying cause may be, a model where propositions are essentially ignored when they are not understood has some interesting properties that seem to relate well to the real world. For one, it can explain why it could be non-wasteful for HBD research on the black-white IQ gap to continue, even though it was always obvious for all of human history that it’s genetic and the data has been clear since the MTRAS. If understanding all of the evidence is too hard for 95% of people, that evidence is useless for demagogic purposes. If elites are committed to lying, the evidence is basically politically useless; the distribution of memes cannot be effected with that evidence, and so political behavior cannot be changed through memetability.

Developing lower IQ evidence that can be understood by large fraction of the population, such as Finding The Genes in a single study, does open up potential of demagoguery, the seizure of The Hammer from the Patriciate.

Furthermore, watering down evidence and focusing more on easy to understand evidence when engaging in mass arguments should be more profitable than attempting to “Euler” people with evidence they probably won’t understand. Don’t be TPO! It may be profitable to slowly explain basic statistics in a way that a lot of people can understand, since the vast majority of people aren’t even familiar with correlation or linear regression. I can say with certainty that my college educated, professional parents cannot explain variance or correlation at all. They are at least 1 SD people.

I don't think people use Bayesian inference at all; I am sure you are familiar with the work of Daniel Kahneman and Amos Tversky, where they tested exactly this and found that people's answers are not at all consistent with Bayesian reasoning, and instead are consistent with the use of simple heuristics. Even the critics (E.G. Gigerenzer) of their findings within psychology do not believe we use Bayesian reasoning, they just point to other heuristics. Maybe you have already considered this, and think they are dumb.

"Whether or not they reject updates of priors based on the projected costs of such an update is an interesting question."

I do think this is the case, and there are both cognitive and emotional costs to updating priors. The cognitive cost is is the cost of updating your world model given the new information, which can potentially result in cascading changes when the new information makes the model internally inconsistent. For instance, if you used to believe that women are equal to men, and in light of new evidence you now believe that women are substantially different from men, you then need to reassess your beliefs on the wage gap, which may then lead to reconsidering the role of women in the workforce, etc. Given how the mainstream world model is basically inverted, one small change in beliefs can necessitate a huge cascade of changes to keep the model consistent and reduce cognitive dissonance. This makes the “activation energy” for accepting non-mainstream beliefs very high for most people.

It’s also possible that the new data suggests a new possible world model, but doesn’t provide enough evidence to justify accepting it over the existing model. Then you would need to keep both models in mind when evaluating evidence, eventually accepting the new one or keeping the old one once it is clear which one is better. This is time and energy intensive, and requires a high IQ to begin with. This is why Kuhnian revolutions are generally the product of a single person who has taken the time to come up with a new model. Everyone who comes after simply accepts the new model and works within it (of course this is a simplification). See Max Planck’s quote about science advancing one funeral at a time.

Then you have the emotional cost. I think Peterson is basically right when he says that people live within a narrative that they tell about themselves, comprised of their objective world model, their value hierarchy, and their accomplished past and projected future. Changes to this narrative can be very stressful, and if the change is large enough it can completely destabilize someone’s identity. It’s fundamentally the same reason that people ignore evidence that their partner is cheating on them, even when it’s obvious to other people. If you are a standard issue Leftist then accepting evidence that points to the fact that the Black-White achievement gap is due to genetics can blow up your entire identity. Unless you have a fundamental commitment to truth (which I agree the vast majority do not have) you’re not very likely to pay that price.

“Knowing truth, whether unconsciously or not, should be maximally adaptive. If there is a wall in front of you, and it becomes politically expedient to lie about there being a wall, you want to be an organism that both lies about the wall and knows there is a wall there.”

Crafting a lie to fit with a given ideology is cognitively expensive, and most of the population is probably not capable of doing that on a regular basis. Someone who is speaking honestly will simply generate an utterance based on their genuinely-held worldview, but someone who is lying has to simulate an ideologically-compliant distribution and then sample from that. This would be why individuals and populations that specialize in lying skew towards higher verbal IQs. Repeatedly lying can also have the effect of causing you to believe your own bullshit, which I think is an adaptation to reduce the cognitive cost of lying. Consequently, I think this is only practicable for people with >115 verbal IQ and a particular temperament (probably high in dark tetrad traits).