In the ancestral environment there were no abstract disciplines with vast bodies of carefully gathered evidence generalized into elegant theories transmitted by written books whose conclusions are a hundred inferential steps removed from universally shared background premises. - Eliezer Yudkowsky

You are likely to be unfairly biased against genetic hypotheses. A few months ago, I ran a small survey asking people whether they thought key human differences and changes were due to genetics or “culture.” I expected to find differences between leftists and conservatives, with leftists more biased against hereditarianism. Instead, I found hardly any variance at all.

What’s even funnier is that “culture” hardly means anything at all. There are 160 different definitions of it. Because of this, I advocate tabooing the word “culture”: deleting it from our vocabulary and instead using other, clearer words for whichever of its 160 different meanings we intend.

The 160 different meanings can, however, be factor-analyzed down to 3 major factors: culture-as-information, culture-as-phenotype, and culture-as-genotype. Culture-as-genotype differences are the same as genetic differences. Culture-as-phenotype differences are a superset of genetic differences, so it is a category error to juxtapose phenotypic differences with genotypic ones. Only culture-as-information differences properly contrast with genetic differences. We conclude that if the respondents meant anything coherent, they meant that informational differences explain continental economic disparities: the populations have the same economically relevant genomes but access to different economically relevant information.

Is that plausible in the age of the internet? No.

Are you mentally recoiling from the statement you just read? You have anti-hereditarian bias. Don’t believe me? Let’s try a thought experiment. Imagine you live 10,000 years in the future and you come across an alien planet with two populations on two different continents and a large economic gap between them. A fair prior is a 50% chance that the gap is mostly due to genetics and a 50% chance that it isn’t.

What does an unbiased lay observer see after arriving, and how should it update his beliefs? First, he sees that the populations are visually distinct for genetic reasons. Being unbiased, he is now at 75% genes, 25% not genes. Then he sees that both have extremely similar education systems, structured around the same subjects and the same amount of instruction time (everyone spends all their time in these systems until they’re about 20). 95% genes, 5% not genes. Then he finds out that for generations there has been open, easy travel between the two continents (airplanes). 99% genes, 1% not genes. Then he finds out that for over a generation all knowledge has been transmissible at the speed of light between the continents, that every member of both populations has free and total access to that knowledge whenever they want, and that they can communicate instantly between continents through this system for free (the internet). 99.99% genes.

As an unbiased lay observer, what other justified information would you have? None. This is why all the unbiased lay observers in history were hereditarians. When people resist this kind of reasoning, they have to lean on complex, counter-intuitive arguments such as “race is a social construct.” But you’re an unbiased lay observer, so when you see clearly different skull shapes, heights, and skin colors, you don’t think “social construct.” You think “dog breeds.” These are furry, grotesque-looking aliens anyway; you don’t have a “humanitarian bias.” The education comparison is usually resisted by appeals to “school quality.” But as a lay observer, you don’t have access to data on school quality, and you have no justified reason to think it differs in any meaningful way when both populations spend equal time in school and have access to the same information there. As for the internet, to reject this point you have to be inculcated with some sort of weird, counter-intuitive Freudian nonsense about habitus and implicit learning. That is not justifiable without rigorous data (which doesn’t exist), and at that point you’re not a lay observer, and we can just get into IQ, Smart Fraction Theory, and twin studies, which show what is obvious to lay observers.

What is the point of this? I’m attempting to make the following argument:

1. An unbiased lay observer would answer the opposite way from the participants in my survey.
2. A truthful expert would do the same, because the data confirms it’s mostly genetics.
3. Therefore, most people are biased against genetic hypotheses.

But we have an issue here: how do I demonstrate point 2 without making you an expert? I don’t have to. I just have to show you that the cause of your deviation from unbiased common sense is not expertise. If you are not already convinced of point 3, you probably think you have some non-trivial information that provides evidence against the genetic hypothesis and elevates you above our lay observer. But under point 2, no such data exists. Group differences in genetics are an awesome example for using Bayesian reasoning to demonstrate bias, because they can be cleanly modeled as a parameter estimated from data, just like the mean height in a population.

If you come away with a bad posterior for mean height, a few things could have happened, assuming no fraudulent data:

1. Biased data: you sampled heights from the NBA, thinking it was the general population. This is like getting all your news from MSNBC. Have your opinions on culture been formed by overwhelmingly biased sources that only show you a subset of the data? Did you learn about “culture differences” from anthropology professors 20 years ago? Throw all of that out; you’re better off restarting at a neutral prior like our lay observer had.

2. Reading error: you randomly add a few 0s to each height. Oops! Your mean is totally off now. This is analogous to having no idea how to interpret the data you’re getting, and laymen who see data like this may update improperly. In particular, I call treating a study showing that school can explain 3% of a gap as good evidence for culture effects the “effect size fallacy.” Culturists will say “there is an effect” while leaving out the fact that it’s minuscule (and not all causal!). It’s as if 3% becomes 30% in their minds, either because of instinctive bias (something like a coding error in your updating procedure) or because they know very little about the problem domain. The latter is like misreading a height of 60 inches as 600 inches because you have basically no idea how tall people should be. It is what happens when people try to interpret the literature without knowing the first thing about twin studies, heritability, plausible effect sizes, statistics, and so on, which I’m sure plenty of people do, given how often people who should definitely think of themselves as laymen gesture towards stuff like what’s in the link.

3. Petrified prior: you start your estimation with a prior that is a Dirac delta at 100% culture, 0% genetics. In other words, you’re just instinctively biased and will ignore anything you don’t like while accepting anything you do. If this is you, this article won’t do anything for you, so we’ll assume you’re 1 or 2.
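The three failure modes above can be made concrete with a toy estimate of mean height. This is a minimal sketch, assuming a conjugate normal model with known measurement noise and made-up numbers (a true mean of 69 inches, a standard deviation of 3, samples of 200, a broad prior centred at 66); none of it is real data, and the names `posterior_mean` and `run_demo` are hypothetical. The biased sample is the tallest 2% of the simulated population, the reading error multiplies every height by 10, and the petrified prior is a point mass (zero prior variance).

```python
import random
import statistics


def posterior_mean(prior_mean, prior_var, data, noise_var):
    """Posterior mean of an unknown population mean under a normal prior
    and normally distributed measurements with known variance.

    A prior variance of 0 is a Dirac (point-mass) prior: the posterior
    equals the prior no matter what the data say.
    """
    if prior_var == 0:
        return prior_mean
    precision = 1 / prior_var + len(data) / noise_var
    return (prior_mean / prior_var + sum(data) / noise_var) / precision


def run_demo(seed=0):
    rng = random.Random(seed)
    true_mean, sd = 69.0, 3.0  # illustrative adult heights in inches, not real data
    population = [rng.gauss(true_mean, sd) for _ in range(10_000)]

    fair_sample = rng.sample(population, 200)
    nba_sample = sorted(population)[-200:]    # 1. biased data: only the tallest 2%
    misread = [h * 10 for h in fair_sample]   # 2. reading error: an extra 0 on every height

    broad_prior = {"prior_mean": 66.0, "prior_var": 100.0, "noise_var": sd ** 2}
    return {
        "population": statistics.fmean(population),
        "fair": posterior_mean(data=fair_sample, **broad_prior),
        "biased": posterior_mean(data=nba_sample, **broad_prior),
        "misread": posterior_mean(data=misread, **broad_prior),
        # 3. petrified prior: a point mass at 60 inches ignores the fair sample
        "petrified": posterior_mean(60.0, 0.0, fair_sample, sd ** 2),
    }


if __name__ == "__main__":
    for name, estimate in run_demo().items():
        print(f"{name:>10}: {estimate:7.1f}")
```

With the broad prior, the fair sample lands close to the simulated population mean, the biased and misread samples land confidently far from it, and the point-mass prior returns 60 whatever data it is shown.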

Now, if you came in here with culture bias, agreed with the lay-observer example, and now think you probably had biased sampling or totally misinterpreted some data, you should accept that YOU were unfairly biased against the genetic hypothesis, and that, by extension, most people, being no more expert than you, are also biased.

The last objection that I foresee is that, while you’re not an independent expert, you trust your data source to help you read the data properly and to give you an unbiased view.

I have an argument that, in any society where this attitude is widespread, knowledge will be biased to an extent comparable to the epistemic bias of medieval fundamentalism or ancient paganism.

Science and math and the blockchain of knowledge

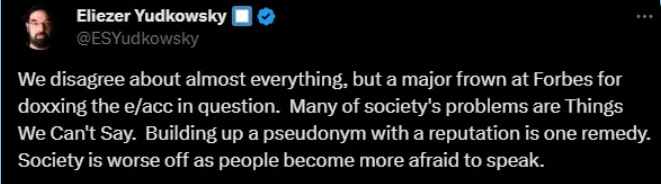

I read this tweet and something stood out to me: the emphasis on “reputation.” This irked me for some reason. He didn’t say building a pseudonym with reach. He didn’t say building up a good body of proof for things we can’t say … he said building up a pseudonym with “reputation.” This implies that, to Yudkowsky, reputation, or trust, is the basis of knowledge.

In other words, Yudkowsky is what I would call a trustor. When he receives a claim, he does not evaluate whether he has also received sufficient direct evidence for it; rather, he evaluates whether the sender of the claim is generally “trustworthy.”

This grossed me out a bit, because I tend to do the opposite: I hold null opinions on topics where I haven’t processed the proof myself.

But my approach is evolutionarily novel. In the distant past, most actionable knowledge was just individual experience, and there was no video to back it up. The people testifying to this information simply had to be trustworthy by reputation.

Obviously, a system of knowledge where the only evidence is the reputation of the claimant is deeply flawed, and it led to all sorts of famous misunderstandings, from Aristotle’s biology to geocentrism to the four humors theory.

It is notable that in modern mathematics, reputation is useless. Every claim must be accompanied by a logical proof, and every node in the belief network is expected to be able to process the proof itself and accept it on its merits. Science is similar. With a single assumption, that there is no fraudulent data, science is completely decentralized. All scientific claims must be accompanied by their data-based inductive “proofs,” and from these the truth can be reached with a certainty that varies with the inductive weight of the proof, which is determined by things intrinsic to the proof, like sample size, problem coverage, and so on.

If you relax the no-fraud assumption, then as long as you know the fraud rate and know when data sources are independent, you can weed out the fraud through sufficient replication, without any reputation or credibility involved. The real-life fraud rate seems to be pretty low, and replication is generally high, so modern science is trust-free. There should be no “trusting the science.” This is another way of looking at the empirical/non-empirical divide between “science” and pre-science. All substantial claims about the world are in a sense empirical. Science is not only empirical, it is secure and decentralized, whereas “proof: I’m a trusted authority and I said it, so believe me” is centralized and vulnerable.
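Under the stated assumptions (a known fraud rate, with fraud striking each independent source independently), the replication argument reduces to simple arithmetic: the chance that a claim rests entirely on fraud shrinks geometrically with the number of independent sources. A minimal sketch; the function names and the 2% fraud rate in the usage lines are illustrative assumptions, not measured values. Outlets that merely repeat one study count as a single source, which is why knowing when sources are independent matters.

```python
def prob_all_fraudulent(fraud_rate: float, independent_sources: int) -> float:
    """Chance that every independent source behind a claim is fraudulent,
    assuming each source is fraudulent independently with the same rate."""
    return fraud_rate ** independent_sources


def replications_needed(fraud_rate: float, tolerance: float) -> int:
    """Smallest number of independent sources (at least 1) that pushes the
    all-fraudulent probability down to `tolerance` or below."""
    if not 0.0 < fraud_rate < 1.0:
        raise ValueError("fraud_rate must be strictly between 0 and 1")
    if tolerance <= 0.0:
        raise ValueError("tolerance must be positive")
    k = 1
    while prob_all_fraudulent(fraud_rate, k) > tolerance:
        k += 1
    return k


if __name__ == "__main__":
    # Ten outlets repeating one study are still one independent source.
    print(prob_all_fraudulent(0.02, 1))
    print(replications_needed(0.02, 1e-6))  # -> 4
```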

But what happens when you do have people “trusting the science?” What does this mean? Generally, it means they form strong opinions without actually fully processing the proofs themselves. Instead, they decide based on the reputation of the claimant.

But this is not science at all! This is indistinguishable from a centralized, vulnerable, ancient faith-based belief system.

Think of knowledge as a network. An ancient prescientific system has a few central authorities and a bunch of trustors. Full science is decentralized: all nodes are proof processors, and they don’t exchange any reputation signals. The truth-accuracy of the latter network should be higher than that of the former, because it is more secure. So what happens when you add trustors and authorities to the decentralized network? If you add a few, not much. If they become 99% of the network, biases will be amplified, and the network becomes no better than the ancient one. It’s not “science” at all.
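The network claim can be illustrated with a toy simulation in the spirit of Condorcet’s jury theorem. This is a minimal sketch under loud assumptions: a single yes/no question, every node that processes the evidence itself is right with the same probability independently of the others, and each trustor copies one randomly chosen authority. The function name and all parameters are hypothetical. It shows one mechanism consistent with the paragraph: when trustors copy a few authorities, their errors become correlated, so the whole network is only about as reliable as a vote among the authorities.

```python
import random


def network_accuracy(n_nodes, n_authorities, node_accuracy, trials=1000, seed=0):
    """Fraction of trials in which the network's majority answer is correct.

    n_authorities == 0 models full science: every node processes the evidence
    itself and is right with probability `node_accuracy`, independently.
    Otherwise only `n_authorities` nodes process evidence, and every other node
    is a trustor that copies one authority chosen at random.
    """
    if not 0 <= n_authorities < n_nodes:
        raise ValueError("need 0 <= n_authorities < n_nodes")
    rng = random.Random(seed)
    correct_majorities = 0
    for _ in range(trials):
        if n_authorities == 0:
            votes = [rng.random() < node_accuracy for _ in range(n_nodes)]
        else:
            authorities = [rng.random() < node_accuracy for _ in range(n_authorities)]
            trustors = [rng.choice(authorities) for _ in range(n_nodes - n_authorities)]
            votes = authorities + trustors
        if sum(votes) > n_nodes / 2:  # True counts as 1, False as 0
            correct_majorities += 1
    return correct_majorities / trials


if __name__ == "__main__":
    print("decentralized:", network_accuracy(1001, 0, 0.6))
    print("3 authorities:", network_accuracy(1001, 3, 0.6))
```

With 1,001 independent processors at p = 0.6, the majority is almost always right. With the same 1,001 nodes but only three authorities, the majority is right roughly as often as at least two of the three authorities are, which is 3p²(1 − p) + p³ ≈ 0.65.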

Returning to culture bias: did you go on Google Scholar and process the data yourself? Are you an expert? Or were you influenced by Chris Rufo, James Lindsay, or some other persona? If you trusted, and you know a lot of people who trust, you’re going to see a level of truth similar to Aristotle’s physics. It doesn’t matter whether the central information nodes claim to be scientific. If you aren’t independently verifying their claims, there is widespread, systematic error.

Sometimes you may trust someone once, or even a number of times, and find that the knowledge they give you pays off. In that case, there is no long-standing trust at all: you are effectively an expert, because you know that what worked for you really does work. But with culture bias, there is no test. If there isn’t instinctive bias against genetic explanations, there most certainly is widespread, blind-faith trust in a few centralized nodes that never have to make their rocket ships fly. And insofar as the BS is tested, it never works; if you’re paying attention, you know that blank-slatist interventions always fail. Development economics doesn’t work, because it’s blank-slatist. That includes South America, Africa, and US Blacks.

At this point, I hope that you are a layman and not a trustor. Next time, we will discuss the genetics of the rise of leftism with the layman in mind. Would an unbiased layman find the genetic hypothesis for the rise of leftism plausible? Or are there reasonable, common fallacies he might fall into?
